Policy Evaluation Analysis For Roles of State in Asymmetric Partially Observable RL

By Ankit Sinha (RA, Khoury College of Computer Sciences, Northeastern University)

Advised by Prof. Christopher Amato & Andrea Baisero (Ph.D. Candidate 2025)

Introduction

Reinforcement Learning (RL) has achieved remarkable success in a variety of domains, from game playing to robotics, yet real-world environments often suffer from partial observability. In such cases, the agent’s decision is based not on the complete environment state, but only on partial observations gathered over time.

To address this, many RL systems use asymmetric learning setups, where privileged information (the true state) is available only during training, not during actual deployment. This idea is central to Offline Training / Online Execution (OTOE) where richer state data improves the critic’s understanding but does not directly influence action selection during execution.

Despite the growing success of asymmetric RL approaches, one fundamental question remains unanswered:

💭 Why does state-based asymmetry improve learning performance?

Is it because the state provides additional information?

Or because it serves as a powerful feature to stabilize learning?

Or perhaps it helps improve exploration and value estimation indirectly?

This research project investigates these questions through controlled fixed-policy evaluations across multiple partially observable environments.

Background

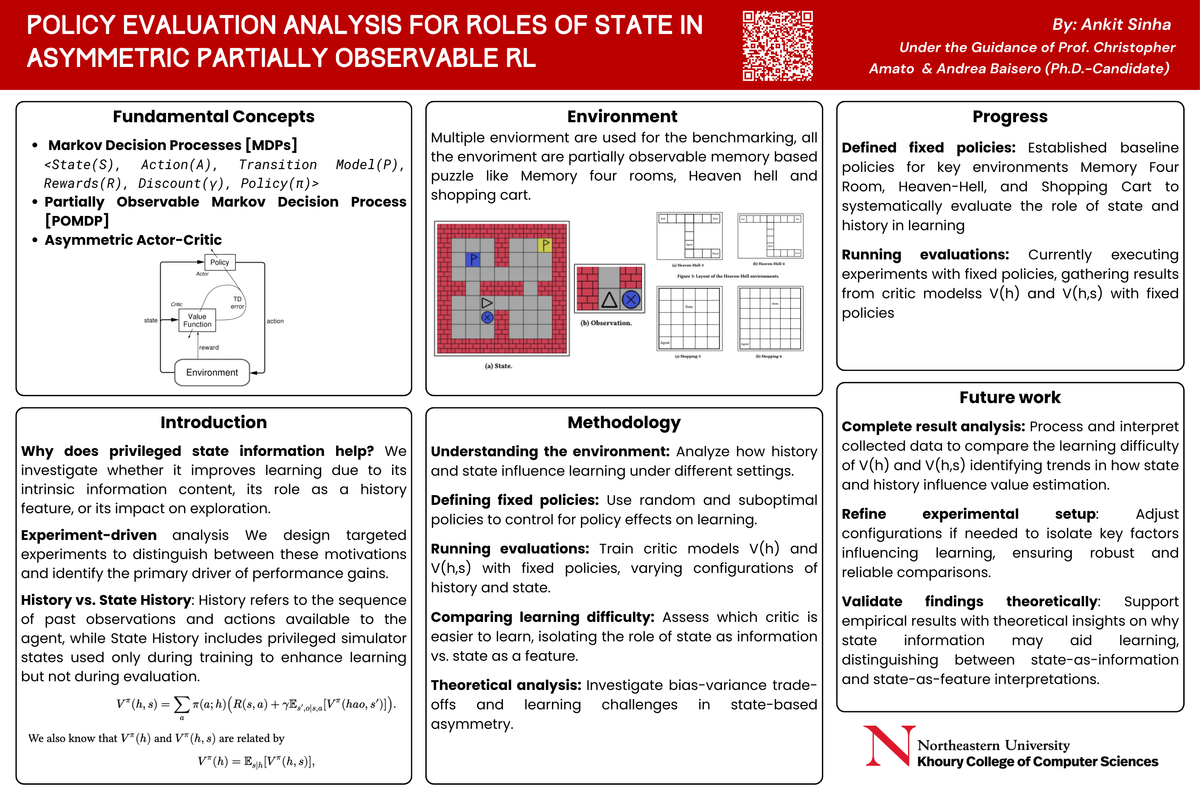

Markov Decision Process (MDP)

An MDP provides the foundation for RL:

- States (S): Situations the agent can be in

- Actions (A): Choices available to the agent

- Transition Model (P): Defines how actions move the agent between states

- Rewards (R): Feedback received for each action

- Policy (π): The strategy mapping states to actions

Partially Observable MDP (POMDP)

In reality, the agent rarely knows the true state. Instead, it receives:

- Observations (O): Noisy or incomplete glimpses of the true state

- History (H): The agent’s memory of past observations and actions

Asymmetric Actor–Critic

The actor chooses actions, while the critic learns to evaluate them.

In asymmetric RL, the critic gets access to privileged state information during training, even though the actor does not. This creates a unique asymmetry that often enhances learning stability and speed — but the reason why remains poorly understood.

Research Motivation

The core idea behind this project is to disentangle the role of state information.

We ask:

- Does privileged state information improve performance because it contains more informative content?

- Or does it simply make the critic’s learning process easier?

- How do history-based critics (V(h)) compare to history+state critics (V(h, s)) in terms of convergence, bias, and variance?

By running carefully controlled experiments using fixed policies, we can isolate these effects and uncover the true contribution of state-based asymmetry.

Experimental Environments

To ensure robust testing, we used several partially observable, memory-based benchmark environments, each representing a unique learning challenge:

- Memory Four Rooms – Navigation under incomplete observability

- Heaven & Hell – Requires long-term memory to distinguish reward sources

- Shopping Cart – Combines decision-making and memory retention tasks

Each environment is designed to challenge the agent’s ability to reason under uncertainty, making them ideal for studying asymmetry and partial observability.

Methodology

Step 1: Understanding the Environment

We first analyzed how history and state influence the learning process under different configurations of observability.

Step 2: Defining Fixed Policies

To avoid confounding effects from changing policies, we used fixed random and sub-optimal policies.

This ensured that any observed performance differences arise from the critic’s input configuration (history vs. history+state) — not from policy improvements.

Step 3: Training the Critics

We trained two critic models:

- V(h): Uses only observation history

- V(h, s): Uses both history and privileged state

Both were trained under identical conditions and policy trajectories.

Step 4: Comparing Learning Difficulty

We compared the learning curves, stability, and bias-variance characteristics of both critics to determine which configuration learns faster and generalizes better.

Progress and Current Status

Fixed Policies Defined:

Baseline policies were established for all three benchmark environments.

Evaluation Runs Ongoing:

We are currently executing critic training runs for both configurations (V(h) and V(h, s)).

Next Steps:

- Aggregate and analyze results from ongoing runs

- Study the bias–variance trade-offs theoretically

- Validate findings against existing literature on asymmetric RL

Preliminary Insights

Although final analysis is underway, early trends suggest that:

- State-based critics (V(h, s)) converge faster and are more stable under noisy observation conditions.

- History-only critics (V(h)) often struggle with long-term dependencies and partial observability.

- The advantage of privileged state may not come purely from its information content, but from its structural role in stabilizing gradient estimates and reducing variance in the critic’s learning process.

Future Work

- Comprehensive Result Analysis: Quantitatively evaluate convergence rates and value estimation accuracy for all environments.

- Theoretical Validation: Develop formal reasoning for why state information helps — distinguishing between state-as-information vs. state-as-feature roles.

- Extension to Policy Learning: Explore how asymmetric critics influence actual policy improvement (beyond fixed-policy evaluation).

- Generalization Tests: Evaluate on larger or more complex Dec-POMDPs to test scalability.

Broader Impact

Understanding why privileged information aids learning is not just an academic curiosity — it has real-world implications for:

- Safe RL: Where state access may be restricted at deployment time.

- Sim-to-Real Transfer: Where training simulations can expose more information than real sensors can.

- Collaborative Multi-Agent Systems: Where certain agents might have asymmetric views of the world.

By improving our theoretical and practical understanding of asymmetric learning, this work contributes to building smarter, safer, and more generalizable reinforcement learning systems.

🧾 References & Acknowledgments

Advisor: Prof. Christopher Amato

Research Mentor: Andrea Baisero, Ph.D. Candidate

Affiliation: Khoury College of Computer Sciences, Northeastern University

- Andera's Asymmetric Actor Critic Paper: arXiv:2105.11674

- Github Repo (Main):https://github.com/abaisero/asym-rlpo

- Mine Github Repo: https://github.com/Ank-22/asym-rlpo/tree/feature/evaluation-algorithms

Special thanks to the Khoury Research Apprenticeship Program (Spring 2025) for supporting this project.

© 2025 Ankit Sinha.

All content, including figures and results, are based on my ongoing research under the guidance of Prof. Amato and Andrea Baisero.

Unauthorized reproduction or use is prohibited.